In the previous post, we looked at some of the basic steps for writing tests for our REST API in ASP.NET Core. We learned how to self-host our API, send HTTP requests to execute our tests, customize logging to report details of these tests, and customize our settings. More specifically, we showed how to use dependency injection to replace an external dependency, such as a database, with an in-memory version backed by a list. That post was a small preview of what we will be addressing in this post.

With the techniques from the previous post in place, we can run our application as deployed in a test without any external calls. By replacing these external calls with TestDoubles, we start to create a boundary and isolate our API. These kinds of tests are better known as component tests. In this post, the second part of a three-part series, we will go into more depth about component tests. We will talk about:

- An introduction to component tests

- Overview of NuGet packages we are using

- Replacing integrations with TestDoubles

We will go into depth about the kind of integrations we will be replacing with a TestDouble. All of this, we will do with the help of dependency injection. Some examples are:

- EntityFramework: Replace DbContext by using the in-memory versions

- HTTP integration: Replace HttpClient with a special handler that simulates our requests.

And finally, we will put all these examples together into a single component test. Let us get to it because there is a lot to discuss.

Introduction

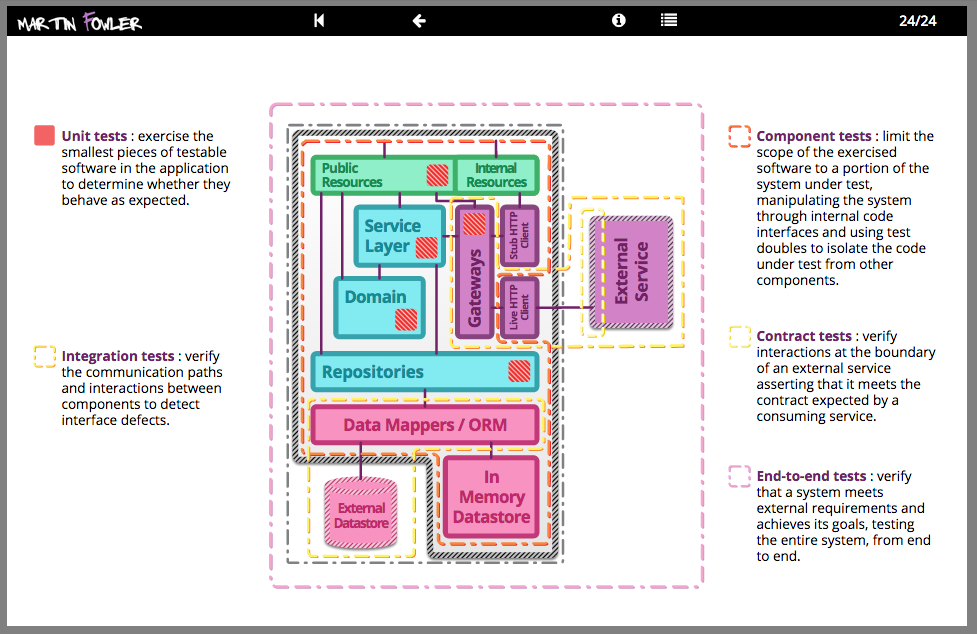

To understand what we are going to do in this post, it is best to look at the definition of a component test. I very much like the description and the presentation from Martin Fowler and Toby Clemson - Testing Strategies in a Microservice Architecture. This post outlines everything about this topic. It serves as a precise definition, one that we will apply in our examples, and serves as a thread through our posts. I highly recommend reading it.

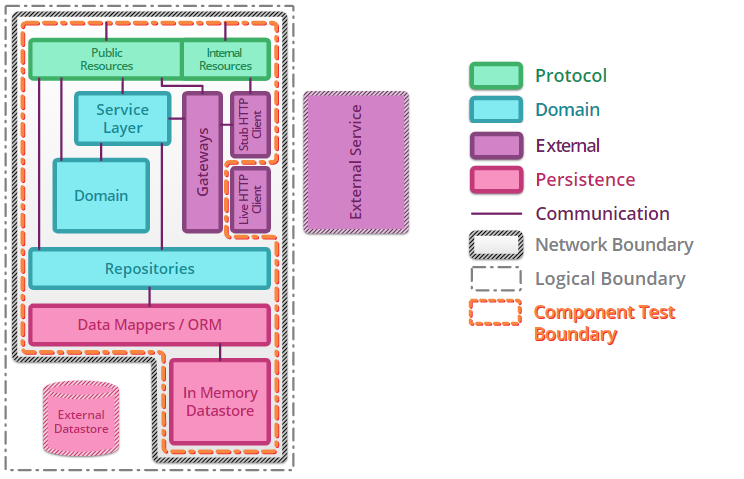

A component test is a test that limits the scope of the exercised software to a portion of the system under test...where component tests deliberately neglect parts of the system outside the scope of the test. - Toby Clemson

Looking at the definition and the above image, we can quickly see the boundary of the component test. The goal of the component test is to limit the scope to only your code. It places any external parts, such as a database or an HTTP service, outside the system. But there could be many more moving pieces in your application. Some other examples we've identified in our application are things like caches, files, streams, message publisher/subscribers, and many more. In practice, when writing these tests yourself, you'll have to identify these "parts of the system" that are outside of the scope of your test.

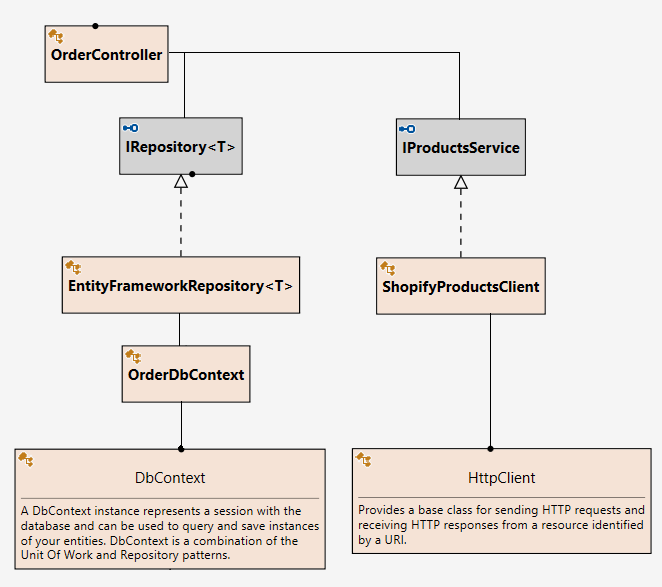

Once identified, we can look at the second part of the definition. This part is about "neglecting" these external dependencies. With the help of dependency injection (as you might have seen in the previous post), we will replace part of our code with a TestDouble. But the question is, which classes do we have to replace? Let's take a look at the class structures.

As an example, take the above class diagram. Here we need to find the lowest level of integration to replace with dependency injection. You can see that the implementation of the ShopifyProductsClient has a dependency on the HttpClient. It uses this class to add additional headers such as an API key and makes sure the response is valid. Furthermore, this client is exposed through the abstraction of an interface called IProductsService. The implementation of this service is not at the lowest level. Suppose you replace the interface with a dummy implementation returning a single product. When doing so in your component test, you are only replacing part of your code and you are not really testing the implementation of the service. It would be better if you have a way to control the HttpClient and its respective requests and responses. This kind of control will allow you to simulate how an external API call such as Shopify responds. You can assert that your microservice adds the API key to the headers and handles the happy flow well. This method also allows you to test how your service responds resiliently to an HTTP 4XX or 5XX response.
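To make the idea of replacing the lowest level concrete, here is a minimal sketch that stubs the HttpMessageHandler underneath HttpClient. The shape of IProductsService and ShopifyProductsClient, the GetProductJsonAsync method, the StubHttpMessageHandler class and the shopify.example address are all assumptions made for illustration; the real classes in the sample application may look different.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;
using Xunit;

// Hypothetical abstraction and client, loosely shaped after the class diagram.
public interface IProductsService
{
    Task<string> GetProductJsonAsync(string productNumber);
}

public class ShopifyProductsClient : IProductsService
{
    private readonly HttpClient _httpClient;
    private readonly string _apiKey;

    public ShopifyProductsClient(HttpClient httpClient, string apiKey)
    {
        _httpClient = httpClient;
        _apiKey = apiKey;
    }

    public async Task<string> GetProductJsonAsync(string productNumber)
    {
        using var request = new HttpRequestMessage(HttpMethod.Get, $"products/{productNumber}.json");
        request.Headers.Add("X-Shopify-Access-Token", _apiKey);

        using HttpResponseMessage response = await _httpClient.SendAsync(request);
        response.EnsureSuccessStatusCode(); // throws HttpRequestException on 4XX/5XX
        return await response.Content.ReadAsStringAsync();
    }
}

// TestDouble at the lowest level: every request is answered by the supplied function.
public class StubHttpMessageHandler : HttpMessageHandler
{
    private readonly Func<HttpRequestMessage, HttpResponseMessage> _respond;

    public StubHttpMessageHandler(Func<HttpRequestMessage, HttpResponseMessage> respond) => _respond = respond;

    protected override Task<HttpResponseMessage> SendAsync(
        HttpRequestMessage request, CancellationToken cancellationToken)
        => Task.FromResult(_respond(request));
}

public class ShopifyProductsClientTests
{
    private static ShopifyProductsClient CreateClient(HttpMessageHandler handler) =>
        new ShopifyProductsClient(
            new HttpClient(handler) { BaseAddress = new Uri("https://shopify.example/") },
            "secret");

    [Fact]
    public async Task AddsApiKeyHeader()
    {
        string seenToken = "";
        var handler = new StubHttpMessageHandler(request =>
        {
            // Capture the header while the request is still alive.
            if (request.Headers.TryGetValues("X-Shopify-Access-Token", out var values))
                seenToken = string.Join(",", values);
            return new HttpResponseMessage(HttpStatusCode.OK) { Content = new StringContent("{\"id\":1}") };
        });

        string json = await CreateClient(handler).GetProductJsonAsync("APPLE_IPHONE");

        Assert.Equal("secret", seenToken);
        Assert.Equal("{\"id\":1}", json);
    }

    [Fact]
    public async Task SurfacesServerErrors()
    {
        var handler = new StubHttpMessageHandler(
            _ => new HttpResponseMessage(HttpStatusCode.InternalServerError));

        await Assert.ThrowsAsync<HttpRequestException>(
            () => CreateClient(handler).GetProductJsonAsync("APPLE_IPHONE"));
    }
}
```

Because the stub sits below the client, the code that adds the header and validates the response is exercised for real. Replacing IProductsService with a dummy would skip exactly that code.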

Some NuGet packages we are using

Moq

Moq is a package that, in their own words, "is intended to be simple to use, strongly typed (no magic strings!, and therefore full compiler-verified and refactoring-friendly) and minimalistic (while still fully functional!)". I've worked with Moq for a very long time and it suits most of my needs when I need to inject an abstraction into my SystemUnderTest. Moq can serve both as a Mock and a Spy at the same time. On the one hand, it lets you set up expectations for the class or interface that is being called and make it respond in a certain way. On the other hand, it allows you to verify invocations, such as how many times a member was called and with what parameters.

```csharp
using System;
using Moq;

// IFoo stands in for whichever abstraction you want to mock.
var mock = new Mock<IFoo>();

// basic example
mock.Setup(foo => foo.DoSomething("ping")).Returns(true);

// access invocation arguments when returning a value
mock.Setup(x => x.DoSomethingStringy(It.IsAny<string>()))
    .Returns((string s) => s.ToLower());

// throwing exceptions when invoked with specific parameters
mock.Setup(foo => foo.DoSomething("reset")).Throws<InvalidOperationException>();
mock.Setup(foo => foo.DoSomething("")).Throws(new ArgumentException("command"));
```

AutoFixture

AutoFixture is a library that aims to simplify the setup for your tests. Generally speaking, when writing a unit test, you need to write some code to arrange some data: complex objects like Customer or Order, or maybe an even more complex structure. When you need large datasets or POCOs with a large number of properties, it is very tedious to set these tests up. I often write a factory class that has some logic to construct data for my domain. But when I'm not interested in what the data is, I use AutoFixture.

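```csharp
[Fact]
public void IntroductoryTest()
{
    // Arrange
    Fixture fixture = new Fixture();
    Customer sut = fixture.Create<Customer>();
    // Assumption: Customer exposes its generated orders through an Orders collection.
    int expectedNumber = sut.Orders.Count;

    // Act
    int result = sut.GetNumberOfOrders();

    // Assert
    Assert.Equal(expectedNumber, result);
}
```

MockHttp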

MockHttp is a testing library for Microsoft's HttpClient class. It allows stubbed responses to be configured for pre-defined HTTP requests and can be used to test your application's service layer. Looking at the aforementioned architecture image, this will help us to replace/intercept "Live HTTP" clients with "Stubbed HTTP" clients. It's great when there are one or multiple HttpClient integrations in your application.

Most of the aforementioned packages will be used in the next examples.

Replacing integrations with TestDoubles

By instantiating the full microservice in-memory using in-memory test doubles and datastores it is possible to write component tests that do not touch the network whatsoever. This can lead to faster test execution times and minimizes the number of moving parts reducing build complexity. However, it also means that the artifact being tested has to be altered for testing purposes to allow it to start up in a 'test' mode. Dependency injection frameworks can help to achieve this by wiring the application differently based on configuration provided at start-up time.

Not much more than the above quote needs to be said about our component tests. The tests run incredibly fast and give us a good idea of how the application will behave. I've been building mostly backend APIs, and being able to assert the outcome of an individual API as a regression check is a big plus for our development team. In the following paragraphs, we will put everything together. For each integration, I'll go over an example of how to replace the implementation to support a component test. Additionally, you will find the source code at the bottom of the post in the resources section.

We have examples for:

- Entity Framework

- HttpClient

While these are only a few limited examples, they should be enough to cover your first main cases. For other integrations, we generally found that Docker containers are available. This will be the subject of a later post.

Replacing the (relational) database

Before going into too much detail, it is important to understand that there are a number of trade-offs when component testing your database/repository code. Below you will find a number of examples resembling the pros and cons from the documentation mentioned here: Testing code that uses EF Core. Essentially, depending on your type of TestDouble, there is a different level of integration with SQL/Entity Framework.

- InMemoryRepository: No integration with Entity Framework or SQL. Just a self-made class with a list or dictionary

- Entity Framework in-memory: No support for transactions or raw SQL

- SQLite: Closest to database interaction, but not production worthy

Depending on your scenario or component test, you can exchange these options and choose to adopt one or multiple solutions. In the example source code, you will find all three examples.

InMemoryRepository (No integration)

Assuming you have some sort of IRepository interface, we can use dependency injection, as in our previous post, to replace the implementation with a version that uses a List<T> or a Dictionary<TKey,TValue>. There are a couple of advantages to this scenario. First, you don't have to worry about setting up the data. If you have a complex database, you might need to insert dependent data before you have it in the desired state for your test. Second, the ConfigureTestServices call is relatively simple.

```csharp
.ConfigureTestServices(services =>
{
    services.AddSingleton(typeof(IRepository<>), typeof(InMemoryRepository<>));
});
```

However, the big disadvantage is that the component test never covers your object-relational mapping (ORM) framework. Ideally, it is much better to run against an actual database to test the integration, as it is closer to the deployed scenario and can identify integration mistakes early. The challenge, in this case, is that it must also be able to run in your CI/CD pipelines. Furthermore, if you run your component tests in parallel, you need to make sure that they don't conflict with one another. An in-memory database may or may not be available to you, depending on the ORM framework you use.
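The InMemoryRepository registered in the snippet above is not shown in this post. A minimal sketch could look like the following, assuming a hypothetical IRepository<T> with only an AddAsync and a GetAllAsync method; your own interface will likely have more members.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical repository abstraction, for illustration only.
public interface IRepository<T> where T : class
{
    Task AddAsync(Guid id, T entity);
    Task<IReadOnlyList<T>> GetAllAsync();
}

// TestDouble that keeps everything in a dictionary instead of a database.
public class InMemoryRepository<T> : IRepository<T> where T : class
{
    // ConcurrentDictionary because the test host may serve requests on several threads.
    private readonly ConcurrentDictionary<Guid, T> _items = new ConcurrentDictionary<Guid, T>();

    public Task AddAsync(Guid id, T entity)
    {
        _items[id] = entity; // insert, or overwrite an existing entry with the same id
        return Task.CompletedTask;
    }

    public Task<IReadOnlyList<T>> GetAllAsync()
    {
        // Return a copy so callers cannot observe later modifications.
        IReadOnlyList<T> snapshot = _items.Values.ToList();
        return Task.FromResult(snapshot);
    }
}
```

Registering it as a singleton matters here: with a scoped or transient lifetime, every request would get a fresh, empty dictionary and an order created in one request would be gone in the next.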

Using entity framework in-memory (Low integration)

In our case, EntityFramework does support this with the Microsoft.EntityFrameworkCore.InMemory NuGet package. The setup is simple, but switching database providers requires some additional dependency injection tricks. When you invoke services.AddDbContext<MyDbContext>(), EF performs some additional steps. It registers two services:

- A service to resolve DbContext

- A service/factory method to resolve DbContextOptions<MyDbContext>.

The preferred database provider is registered in these options. Thus, in order to switch the database provider from your default to the in-memory version, we need to override these options. Be aware that any other configuration you might have done, like enabling detailed errors, is also overridden and needs to be reconfigured. Below is an example that uses the EntityFramework in-memory database.

```csharp
webHostBuilder
    .ConfigureTestServices(services =>
    {
        // Depending on the lifetime of your options, this must be either Scoped or Singleton.
        // The default is Scoped.
        services.AddSingleton((serviceProvider) =>
        {
            var optionsBuilder = new DbContextOptionsBuilder<OrderDbContext>().UseInMemoryDatabase("orders");
            return optionsBuilder.Options;
        });
    });
```

Using SQLite in-memory (Medium integration)

For the SQLite option, we need to do a similar thing with our dependency injection. As you can see in the above example, configuring a different database provider is very easy, but for SQLite, we need to implement the IDisposable interface because we are working with an external resource: the database connection. To achieve this, you typically use a Setup/Teardown pattern. xUnit uses the test class constructor as "Setup" and IDisposable.Dispose as "Teardown".

```csharp
using System;
using System.Data.Common;
using Microsoft.AspNetCore.Hosting;
using Microsoft.AspNetCore.TestHost;
using Microsoft.Data.Sqlite;
using Microsoft.EntityFrameworkCore;
using Microsoft.Extensions.DependencyInjection;
using Xunit.Abstractions;

public class SqlLiteComponentTest : IDisposable
{
    private readonly DbConnection _connection;

    // "Setup": runs before every test.
    public SqlLiteComponentTest(ITestOutputHelper testOutputHelper)
    {
        // Omitted
        _connection = CreateInMemoryDatabase();
    }

    private void CustomizeWebHostBuilder(IWebHostBuilder webHostBuilder)
    {
        webHostBuilder
            .ConfigureTestServices(services =>
            {
                services.AddSingleton((serviceProvider) =>
                {
                    var optionsBuilder = new DbContextOptionsBuilder<OrderDbContext>()
                        .UseSqlite(_connection);
                    return optionsBuilder.Options;
                });
            });
    }

    private static DbConnection CreateInMemoryDatabase()
    {
        // The in-memory database exists for as long as the connection stays open.
        var connection = new SqliteConnection("Filename=:memory:");
        connection.Open();
        return connection;
    }

    // Omitted

    // "Teardown": runs after every test.
    public void Dispose()
    {
        _connection.Dispose();
    }
}
```

Again, it is important to note that depending on your scenario, you might choose one of the above options for your own component tests. Each choice has a different trade-off, as they work differently and provide a different level of integration with the database. But as shown here, getting started with each of these options is equally simple, and they all work interchangeably.
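One step that is easy to miss with SQLite in-memory is that a freshly opened connection contains no tables. The sketch below shows one way to create the schema from the EF model with EnsureCreated. The Order and OrderDbContext types here are simplified stand-ins for the ones in the sample application, and the SqliteSchemaSketch class is purely illustrative.

```csharp
using System;
using System.Linq;
using Microsoft.Data.Sqlite;
using Microsoft.EntityFrameworkCore;

// Simplified stand-ins for the application's entity and context.
public class Order
{
    public Guid Id { get; set; }
    public decimal TotalAmount { get; set; }
}

public class OrderDbContext : DbContext
{
    public OrderDbContext(DbContextOptions<OrderDbContext> options) : base(options) { }

    public DbSet<Order> Orders => Set<Order>();
}

public static class SqliteSchemaSketch
{
    public static int CreateSchemaAndCountOrders()
    {
        // The database is dropped as soon as this connection is closed.
        using var connection = new SqliteConnection("Filename=:memory:");
        connection.Open();

        var options = new DbContextOptionsBuilder<OrderDbContext>()
            .UseSqlite(connection)
            .Options;

        using (var setup = new OrderDbContext(options))
        {
            // Builds the tables from the EF model; migrations are not applied.
            setup.Database.EnsureCreated();
            setup.Orders.Add(new Order { Id = Guid.NewGuid(), TotalAmount = 495m });
            setup.SaveChanges();
        }

        // A second context on the same open connection sees the same data.
        using var verify = new OrderDbContext(options);
        return verify.Orders.Count();
    }
}
```

In a component test, the EnsureCreated call would typically live in the test class constructor, right after the connection is opened, so that every test starts from an empty but fully created schema.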

Replacing HttpClients with a MockHttpHandler

Another low-level dependency is your HttpClient. In our example application from the previous post, we created a service that integrates with Shopify. Now we can write a test that calls our order API, but that would fail because it tries to connect to the Shopify service. We need a way to intercept this, and we will be doing this with the MockHttp library.

Before writing a test, I generally use Postman to get example requests and responses, and I store these inside JSON files. With the help of these files, you can quickly set up a request in your code (specifying HttpMethod, QueryString and Headers) and return a response the same way the API does. Below you'll see an example of such a setup.

```csharp
MockHttpMessageHandler
    .When(HttpMethod.Get, "https://shopify/api/v1/products/admin/api/2021-07/products/APPLE_IPHONE.json")
    .WithHeaders(new Dictionary<string, string>
    {
        ["X-Shopify-Access-Token"] = "secret"
    })
    .Respond(MediaTypeNames.Application.Json, File.ReadAllText("Shopify/Examples/APPLE_IPHONE.json"));
```

This is a convenient way of making component tests for your HTTP integrations.

Furthermore, in my personal experience, it has helped our team to even reproduce bugs. There were certain cases that we did not foresee, and through diagnosing and mimicking the request/response in a component test, we were able to quickly identify and resolve the bug, complementing our code base with another component test.
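For the stubbed responses to be used, the handler has to end up underneath the HttpClient that the application resolves. The sketch below shows one possible wiring through IHttpClientFactory, and simulates a failing Shopify call at the same time. The client name "shopify" and the shopify.example URL are assumptions; the sample application may register a typed client instead.

```csharp
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;
using Microsoft.Extensions.DependencyInjection;
using RichardSzalay.MockHttp;
using Xunit;

public class MockHttpWiringSketch
{
    [Fact]
    public async Task NamedClientUsesTheStubbedHandler()
    {
        // Arrange: stub a 503 for one specific request.
        var mockHttp = new MockHttpMessageHandler();
        mockHttp
            .When(HttpMethod.Get, "https://shopify.example/products/APPLE_IPHONE.json")
            .Respond(HttpStatusCode.ServiceUnavailable);

        // Replace the primary handler of the (hypothetical) "shopify" client.
        var services = new ServiceCollection();
        services
            .AddHttpClient("shopify")
            .ConfigurePrimaryHttpMessageHandler(() => mockHttp);

        using ServiceProvider provider = services.BuildServiceProvider();
        HttpClient client = provider
            .GetRequiredService<IHttpClientFactory>()
            .CreateClient("shopify");

        // Act: no network call is made, the stub answers instead.
        HttpResponseMessage response =
            await client.GetAsync("https://shopify.example/products/APPLE_IPHONE.json");

        // Assert
        Assert.Equal(HttpStatusCode.ServiceUnavailable, response.StatusCode);
    }
}
```

In a component test, the same ConfigurePrimaryHttpMessageHandler call would presumably go inside ConfigureTestServices, using whichever name or typed client your application registers.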

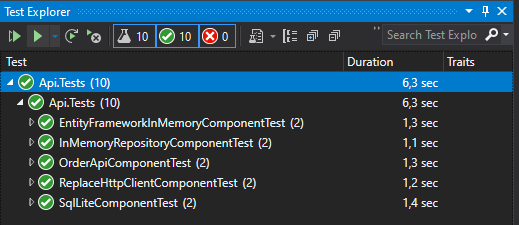

The component test

Bringing all this together we can create the following component test. This test first asserts that there are no orders, then it creates an order, and last but not least, it verifies that the order was created. This component test thus asserts that the HTTP integration works with Shopify. Additionally, it asserts our database is storing the order and we can retrieve it with all the correct details.

```csharp
[Fact]
public async Task ComponentTest()
{
    // Arrange
    MockHttpMessageHandler
        .When(HttpMethod.Get, "https://shopify/api/v1/products/admin/api/2021-07/products/APPLE_IPHONE.json")
        .WithHeaders(new Dictionary<string, string>
        {
            ["X-Shopify-Access-Token"] = "secret"
        })
        .Respond(MediaTypeNames.Application.Json, File.ReadAllText("Shopify/Examples/APPLE_IPHONE.json"));

    // Act #1
    HttpResponseMessage getAllResponse = await SystemUnderTest.GetAsync("/api/v1/orders");
    var orders = await getAllResponse.Content.ReadFromJsonAsync<Order[]>();
    orders.Length.Should().Be(0);

    // Act #2
    HttpResponseMessage createResponse = await SystemUnderTest.PostAsync(
        "/api/v1/orders",
        JsonContent.Create(new CreateOrderDto()
        {
            ProductNumbers = new[] { "APPLE_IPHONE" },
            UserId = Guid.NewGuid(),
            TotalAmount = 495m,
        }));

    // Act #3
    getAllResponse = await SystemUnderTest.GetAsync("/api/v1/orders");

    // Assert
    createResponse.StatusCode.Should().Be(HttpStatusCode.OK);
    getAllResponse.StatusCode.Should().Be(HttpStatusCode.OK);
    orders = await getAllResponse.Content.ReadFromJsonAsync<Order[]>();
    orders.Length.Should().Be(1);
}
```

Conclusion

And that is how we can create component tests. To summarize, we have done a couple of things.

- We have talked about component tests and what they are

- We showed some NuGet packages that can help you with these component tests

- We replaced an HTTP integration with stubbed example responses

- We replaced EntityFramework's database provider to run in different in-memory modes

- We brought all of the above together into a single component test

Writing component tests takes longer but the advantage is that you can create fine-grained tests that match some use cases or scenarios. This helped me and my co-workers to develop more reliable applications. In particular, for us, it became easier to reproduce bugs.

The scope of this post was to show you how to create component tests. While they are a great starting point for getting high code coverage and confidence in your application, there are still many more libraries that you can use to solve your functional or technical requirements. For example, look at Redis, RabbitMQ, Elasticsearch, MongoDB, etc. However, these libraries are harder to replace with a TestDouble. Instead, many of them, when working in cloud-agnostic environments, provide a Docker container implementation. In the next post, I'm going to show you how to spin up Docker containers during your xUnit tests so that we can use containers to provide an actual integration. These new kinds of tests then become integration tests. I would love to hear what your thoughts are.

By combining unit, integration and component testing, we are able to achieve high coverage of the modules that make up a microservice and can be sure that the microservice correctly implements the required business logic. - Toby Clemson


Resources / Credits
